MPC Example

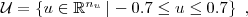

This example shows how to use FiOrdOs for MPC regulation problems of type

![LaTeX

\begin{align*}

\min_{\substack{u_0\ldots u_{N-1}\\x_1\ldots x_N}}\quad

& \tfrac{1}{2}x_N^T P x_N + \tfrac{1}{2}\sum_{k=0}^{N-1} x_k^T Q x_k + u_k^T R u_k

\\

\textrm{s.t.}\quad

&x_{k+1} = A x_k + B u_k\quad&& k=0,\ldots, N-1\\[1ex]

& x_k \in \mathcal{X}\subseteq\mathbb{R}^{n_x} \quad&& k=1,\ldots, N-1\\

& x_N \in \mathcal{X}_f\subseteq\mathcal{X}\\

& u_k \in \mathcal{U}\subseteq\mathbb{R}^{n_u}\quad&& k=0,\ldots, N-1\ ,\\

\end{align*}](lib/exe/imgf797dc1f0c418a6d813fd50abc59a83cf737.png)

where  is the current state of the system.

is the current state of the system.

Let us start with defining some (stable) system and MPC parameters.

A=[0.8 1; 0 0.9]; B=[-1;2]; [nx,nu]=size(B); N=5; Q=eye(nx); R=eye(nu);

Input-only constrained MPC (condensed form)

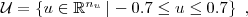

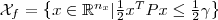

Let us consider a problem with box constraints on the inputs

but no state constraints, i.e.

To obtain a stable closed loop, we take P=dlyap(A',Q) and

The typical procedure is to eliminate the states via

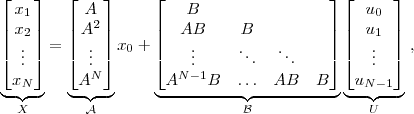

such that the optimization problem can be written in condensed form (the decision variable is the sequence of inputs  only) as

only) as

where

Using FiOrdOs syntax, this problem is specified by

umin=-0.7; umax= 0.7; P=dlyap(A',Q); UU=SimpleSet(N); UU.addSet(1:N,EssBox(nu,'l',umin,'u',umax)); Ai=A; AA=Ai; for i=2:N Ai=A*Ai; AA=[AA;Ai]; end AiB=B; BB=kron(eye(N),AiB); for i=1:N-1; AiB=A*AiB; BB=BB+kron(diag(ones(N-i,1),-i),AiB); end QQ=blkdiag(kron(eye(N-1),Q),P); RR=kron(eye(N),R); H =(BB'*QQ*BB + RR); op=OptProb('H',H, 'g','param', 'c','param', 'X',UU)

where g and c are defined parametric (param), since these entities depend on the initial state  .

.

Next, we set up the solver, generate the code and compile the MEX-interface (requires a compiler to be installed and defined in Matlab).

s=Solver(op) s.generateCode('prefix','mpc1_'); rehash; mpc1_mex_make;

The generated solver can now be used in a closed-loop simulation.

Nsteps=20; trajU=nan(nu,Nsteps); trajX=nan(nx,Nsteps+1); trajX(:,1)=[1;2]; % initial state mparams=struct(); msetgs=struct(); msetgs.algo.maxit=50; for k=1:Nsteps x0=trajX(:,k); % parametric data mparams.g=BB'*QQ*AA*x0; mparams.c=0.5*x0'*(AA'*QQ*AA + Q)*x0; % call solver mres=mpc1_mex(mparams,msetgs); % apply input u_0 to system trajU(:,k) = mres.x(1:nu); trajX(:,k+1) = A*trajX(:,k) + B*trajU(:,k); end % plot results figure(1); clf; stairs(0:Nsteps-1,trajU'); title('inputs'); xlabel('steps'); figure(2); clf; plot(trajX(1,:),trajX(2,:),'*:'); title('state trajectory'); xlabel('x_1'); ylabel('x_2');

Tuning hints

You could for example try the following to possibly improve the performance of the solver.

- Automatic Preconditioning

s.setAutoPrecond('algo')

- Warmstarting

Use previous solution as warmstart for new solver call.

if k>1 msetgs.algo.init=mres.x; % unshifted or ... msetgs.algo.init=[mres.x(nu+1:end);zeros(nu,1)]; % shifted or ... end

- Inline H

Write the matrix-vector multiplication involving

element by element in C.

element by element in C.

s.setSettings('approach','inlineH',true);

- Stopping condition

Stop the algorithm when the function value is

-suboptimal (

-suboptimal ( )

)

s.setSettings('algo', 'stopg',true, 'stopgEps', stopgEps);

with

stopgEps where

where  are the minimal and maximal eigenvalue of the (preconditioned) matrix

are the minimal and maximal eigenvalue of the (preconditioned) matrix  .

(reference)

.

(reference)

- Change maximum number of iterations

Input- and state-constrained MPC (sparse form)

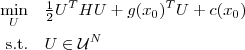

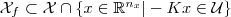

Let us consider a problem with box constraints on the inputs and states

![Math $\mathcal{X} = \left\{ x\in\mathbb{R}^{n_x} \left|\right. \bigl[\begin{smallmatrix}-3.5\\-0.5\end{smallmatrix}\bigr]\leq x \leq \bigl[\begin{smallmatrix}3.5\\2\end{smallmatrix}\bigr] \right\}\ .$](lib/exe/img1dcb279331e40c7a0da8ec535055370c8e35.png)

To obtain a stable closed loop, we take [K,P]=dlqr(A,B,Q,R) and

with

with  maximal such that

maximal such that  .

.

Because FiOrdOs does not support ellipsoids, we make a change of variables for the terminal state by  , such that

, such that  becomes the ball

becomes the ball

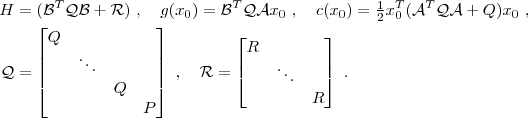

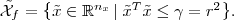

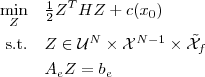

Now, the optimization problem can be written as

where

![LaTeX

\begin{align*}

Z&=\left[\begin{array}{c}u_0\\\vdots\\u_{N-1}\\\hline x_1\\\vdots\\x_{N-1}\\\tilde{x}_N\end{array}\right]\ ,\quad

H = \left[\begin{array}{ccc|cccc}

R & & & & & & \\

&\ddots& & & & & \\

& & R & & & & \\\hline

& & & Q & & & \\

& & & &\ddots& & \\

& & & & & Q & \\

& & & & & & I \\

\end{array}\right]\ ,\quad

c(x_0) = \tfrac{1}{2}x_0^T Q x_0 \ ,\quad\\

A_e &= \left[\begin{array}{ccccc|ccccccc}

-B & & & & &I & & & & \\

&-B & & & &-A & I & & & \\

& &\ddots& & & &\ddots & \ddots & & \\

& & &-B & & & & -A & I & \\

& & & & -B & & & & -A & P^{-1/2}

\end{array}\right]\ ,\quad

b_e(x_0)=

\left[\begin{array}{c}

A\\

0\\

\vdots\\

0\\

0

\end{array}\right] x_0 \ .

\end{align*}](lib/exe/imgb50cd36cb9395f2f1bc03e55cc865cf30b3b.png)

Using FiOrdOs syntax, this problem is specified by

umin=-0.7; umax= 0.7; xmin=[-3.5;-0.5]; xmax=[3.5;2]; [K,P]=dlqr(A,B,Q,R); gam=min([xmax;-xmin;umax;-umin].^2 ./ sum(([eye(nx);-eye(nx);-K;K]*P^(-1/2)).^2,2)); ZZ=SimpleSet(2*N); ZZ.addSet(1:N,EssBox(nu,'l',umin,'u',umax)); ZZ.addSet(N+(1:N-1),EssBox(nx,'l',xmin,'u',xmax)); ZZ.addSet(N+(N),EssBall(nx,'r',sqrt(gam))); H =blkdiag(kron(eye(N),R), kron(eye(N-1),Q),eye(size(P))); Aeu=kron(eye(N),-B); Aex=blkdiag(eye((N-1)*nx),P^(-1/2)) - kron(diag(ones(N-1,1),-1),A); Ae =[Aeu,Aex]; op=OptProb('H',H, 'c','param', 'X',ZZ, 'Ae',Ae, 'be','param')

where c and be are defined parametric (param), since these entities depend on the initial state  .

.

Next, we set up the solver, generate the code and compile the MEX-interface. Note that by default, FiOrdOs chooses the primal-dual approach for this problem.

s=Solver(op) s.generateCode('prefix','mpc2_'); rehash; mpc2_mex_make;

The generated solver can now be used in a closed-loop simulation.

Nsteps = 20; trajU=nan(nu,Nsteps); trajX=nan(nx,Nsteps+1); trajX(:,1)=[1;2]; % initial state mparams=struct(); msetgs=struct(); msetgs.approach.apprMaxit=200; for k=1:Nsteps x0=trajX(:,k); % parametric data mparams.c=0.5*x0'*Q*x0; mparams.be =[A;zeros((N-1)*nx,nx)]*x0; % call solver mres=mpc2_mex(mparams,msetgs); % apply input u_0 to system trajU(:,k) = mres.x(1:nu); trajX(:,k+1) = A*trajX(:,k) + B*trajU(:,k); end % plot results figure(3); clf; stairs(0:Nsteps-1,trajU'); title('inputs'); xlabel('steps'); figure(4); clf; plot(trajX(1,:),trajX(2,:),'*:'); title('state trajectory'); xlabel('x_1'); ylabel('x_2');

Tuning hints

You could for example try the following to possibly improve the performance of the solver.

- Warmstarting

Use previous solution as warmstart for new solver call.

if k>1 msetgs.approach.apprInitX = mres.x; msetgs.approach.apprInitLa = mres.la; end

- Inline A

Write the matrix-vector multiplications involving

element by element in C.

element by element in C.

s.setSettings('approach', 'inlineA',true);

- Stopping criterion instead of fixed iteration number

Stop the approach whenever the gradient-based stopping criterion is true:

s.setSettings('approach', 'stopg',true, 'stopgEpsPrimal',1e-3, 'stopgEpsDual',1e-3);

- Balance primal/dual step size

The primal-dual approach has a tuning parameter '

balancePrimalDual'. If this parameter is >1, more emphasis is put on dual progress whereas if it is <1, primal convergence is emphasized. A reasonable (positive) value can be found empirically, e.g.s.setSettings('approach', 'balancePrimalDual', 1.1);

- Reduce the maximum number of iterations

Check by inspection if a maximum of 100 iterations still gives acceptable control performance.

msetgs.approach.apprMaxit = 100;

!!! This document is stored in the ETH Web archive and is no longer maintained !!!